In the summer of 2020 American intelligence analysts in Afghanistan got a warning from “Raven Sentry”, an artificial-intelligence (AI) tool that they had been operating for a few months. There was a high probability, the AI told them, of a violent attack in Jalalabad, the capital of the eastern Nangarhar province, at the beginning of July. It would probably cause between 20 and 40 casualties. The attack came, a little late, on August 2nd, when Islamic State struck the city’s prison, killing some 29 people.

Raven Sentry had its origins in October 2019, when American forces in Afghanistan faced a conundrum. Their resources were dwindling: troop numbers were falling, bases were closing and intelligence assets were being diverted to other parts of the world. Yet violence was rising; the last quarter of 2019 saw the highest level of Taliban attacks in a decade. To address the problem, they turned to AI.

Political violence is not random. A paper published in International Organization by Andrew Shaver and Alexander Bollfrass in 2023 showed, for instance, that high temperatures were correlated with violence in both Afghanistan and Iraq. When days went from 16°C highs to more than 38°C, they observed, “The predicted probability of an Iraqi male expressing support for violence against multinational forces [as measured in opinion polls] increased by tens of percentage points.”

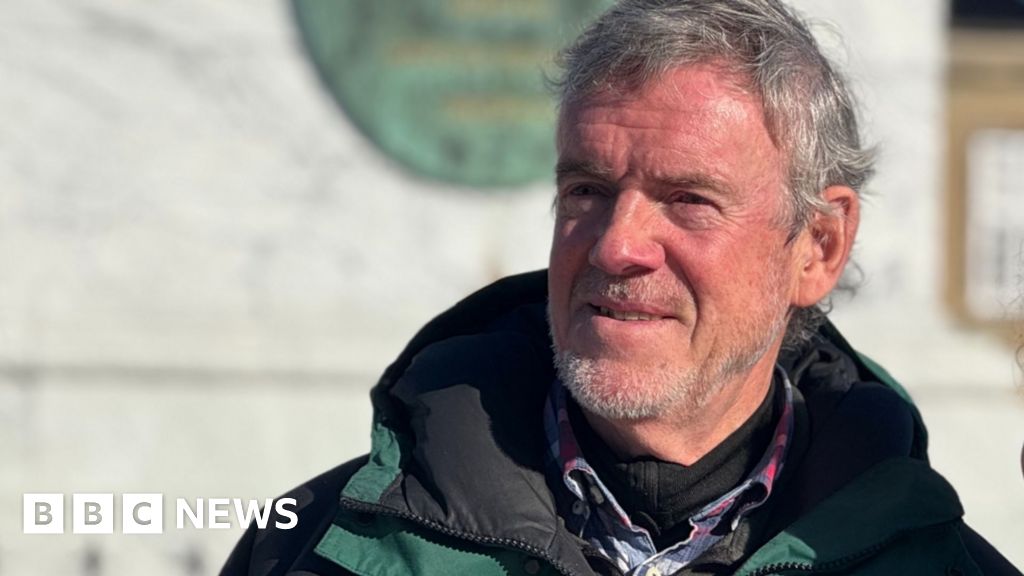

Raven Sentry took this further. A team of American intelligence officers, affectionately dubbed the “nerd locker”, was placed in a special-forces unit, whose culture lends itself to aggressive experimentation. They began by studying “recurring patterns” in insurgent attacks going back to the Soviet occupation of Afghanistan in the 1980s, says Colonel Thomas Spahr, who described the experiment in a recent paper in Parameters, the journal of the US Army War College.

Contractors based in Silicon Valley helped them train a neural network to identify correlations between historical data on violence and a variety of open (ie, non-secret) sources, including weather data, social-media posts, news reports and commercial satellite images. The resulting model identified when district or provincial centres were at higher risk of attack, and estimated the number of fatalities that might result.

America’s intelligence agencies and the Pentagon bureaucracy were initially sceptical of the effort (they remain tight-lipped about it). But the results were striking, says Colonel Spahr, who was chief of staff to the top intelligence official at the NATO mission in Afghanistan at the time. By October 2020 the model had achieved 70% accuracy, meaning that when it judged an attack likely with the highest probability (80-90%), an attack subsequently occurred 70% of the time. That is a level of performance similar to that of human analysts, he notes, “just at a much higher rate of speed”.
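The accuracy figure Colonel Spahr cites is, in effect, a hit rate within the model’s most confident band of forecasts. The short Python sketch below illustrates only that arithmetic. It assumes a hypothetical list of (predicted probability, attack occurred) pairs; the function name, the band limits and the sample data are illustrative inventions, not details of Raven Sentry.

```python
from typing import Iterable, Tuple


def hit_rate_in_band(
    forecasts: Iterable[Tuple[float, bool]],
    low: float = 0.8,
    high: float = 0.9,
) -> float:
    """Share of forecasts with probability in [low, high] that were followed by an attack.

    Hypothetical helper for illustration; not part of any real system.
    """
    # Keep only the outcomes of forecasts that fall inside the confidence band.
    outcomes = [occurred for prob, occurred in forecasts if low <= prob <= high]
    if not outcomes:
        raise ValueError("no forecasts fall in the requested probability band")
    # True counts as 1 and False as 0, so the sum is the number of hits.
    return sum(outcomes) / len(outcomes)


# Invented sample data: ten high-confidence forecasts, seven followed by attacks,
# plus two lower-probability forecasts that the band filter ignores.
sample = [
    (0.85, True), (0.82, True), (0.88, False), (0.90, True), (0.81, True),
    (0.86, True), (0.84, False), (0.89, True), (0.83, False), (0.87, True),
    (0.40, False), (0.55, True),
]

print(hit_rate_in_band(sample))  # 0.7
```

Restricting the count to the top band is what gives the figure its meaning: it says nothing about how often lower-probability warnings came true.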

“I was struggling to understand why it was working so well,” says Anshu Roy, the CEO of Rhombus Power, one of the firms involved. “Then we broke it apart, and we realised that the signatures were evident in the towns: they somehow knew.” Optical satellites (those that sense light) would observe towns as a whole growing darker at night just before attacks, he says, while areas of those towns associated with past enemy activity would grow brighter. Synthetic aperture radar (SAR) satellites, which send out radar pulses rather than relying on ambient light, would pick up the metallic reflections of heightened vehicle activity. Other satellites would detect higher levels of carbon dioxide, says Mr Roy, though it is not clear why.

The model found that attacks were more likely when the temperature was above 4°C, lunar illumination was below 30% and it was not raining. “In some cases”, notes Colonel Spahr, “modern attacks occurred in the exact locations, with similar insurgent composition, during the same calendar period, and with identical weapons to their 1980s Russian counterparts.” Raven Sentry was “learning on its own”, he says, “getting better and better by the time it shut down”. That happened in August 2021, when America pulled out of Afghanistan. By then it had yielded a number of lessons.

Human analysts did not treat its output as gospel. Instead they used it to cue classified systems, such as spy satellites and communications intercepts, to examine an area of concern in more detail. New analysts joining the team were carefully taught the model’s weaknesses and limitations. In some districts, says Mr Roy, it was not very accurate. “Not much had happened there in the past,” he says, “and no matter how much modelling you do, if you can’t teach the model, there’s not much you can do.”

In the three years since Raven Sentry was shut down, armed forces and intelligence agencies have poured resources into AI for “indicators and warnings”, the term for forewarning of attack. Many of the underlying models have matured only recently. “If we’d have had these algorithms in the run-up to the Russian invasion of Ukraine, things would have been much easier,” says a source in British defence intelligence. “There were things we wanted to track that we weren’t very good at tracking at the time.” Four years ago SAR images had a ten-metre resolution, recalls Mr Roy; now it is possible to get images sharp enough to pick out objects smaller than a metre. A model like Raven Sentry, trained on data from Ukraine’s active front lines, “would get very smart very quickly”, he says.

But progress will not be linear. “Just as Iraqi insurgents learned that burning tyres in the streets degraded US aircraft optics or as Vietnamese guerrillas dug tunnels to avoid overhead observation, America’s adversaries will learn to trick AI systems and corrupt data inputs,” warns Colonel Spahr. “The Taliban, after all, prevailed against the United States and NATO’s advanced technology in Afghanistan.”